The Ghost In The Machine — Part Two

PART TWO

THE CLASH OF TWO CULTURES

In 1989 Tim Berners-Lee, a graduate of Oxford University, England, invented the World Wide Web. He envisioned the “WWW” as an internet-based hypermedia initiative designed for global information sharing. In 1990, while working at CERN (the world’s largest particle physics laboratory), Berners-Lee wrote the first World Wide Web server, “httpd”, and the first client, “WorldWideWeb”, a what-you-see-is-what-you-get hypertext browser/editor that ran in the NeXTStep environment. He began this work in October 1990; the program “WorldWideWeb” was first made available within CERN in December, and then on the Internet at large by the summer of 1991.1 By December 1991 the first web server outside of Europe was installed at the Stanford Linear Accelerator Center.2

In the early, formative years physicists were engaged in the design of the web. Many exceptionally skilled individuals with backgrounds in electrical engineering, mechanics, physics, mathematics, and computer science also focused their attention on this significant scientific development. For the most part they stored and exchanged electronic data amongst themselves, advancing web development for the benefit of the scientific community. The network gained a public face in the 1990s. On August 6, 1991, CERN, which straddles the border between France and Switzerland, publicized the new World Wide Web project, two years after Tim Berners-Lee had begun creating HTML, HTTP and the first few Web pages at CERN. An early popular Web browser was ViolaWWW, based upon HyperCard.3 The Mosaic Web browser eventually overtook it in popularity. In 1993 the National Center for Supercomputing Applications at the University of Illinois at Urbana-Champaign released version 1.0 of Mosaic,4 and by late 1994 there was growing public interest in the previously academic and technically centred Internet. The Internet effected rapid and profound changes, altering the way people communicated, entertained themselves, built communities, exchanged ideas, and conducted business. By 1996 the word ‘Internet’ was coming into common daily usage, frequently misused to refer to the World Wide Web. In 1994 alone the web grew from 700 web sites to 12,000, a staggering growth of roughly 1,600%.5 The personal computer with access to the World Wide Web unleashed an unprecedented free-for-all in the evolution of human communication and interaction, the new technology becoming an omnipotent tool.

During this period, the majority of web designers actively developing web sites had backgrounds in the sciences: they were computer programmers with degrees in computer science, technically adept in the world of programming languages, the laws of physics, and the patterns of mathematics. They approached their task from a technical perspective, proficient and analytical in their use of computer applications. The emphasis was purely technical, with the requisite skill in programming overriding all other considerations. In 1994, when the majority of web sites started to appear, traditional communication designers and practitioners within the visual media were denied access to the process of site building, their programming skills deemed inadequate to create even a basic web page. Programming was for the technically minded; artists and designers were relegated to creating simplistic images or developing text. As the web grew, companies recognized the potential of the Internet as a vital marketing device. The position of ‘Web Designer’ soon evolved: a specialist in this burgeoning area of design and marketing. Again, the requisite qualification for the web designer was a degree in computer science, and graphic designers failed to meet the scientific and technical requirements.

The designers of web sites clearly adopted a mechanistic view, their focus on the technology, the software, the computer, and the complexity of the design and application. The standard model for interface design was technically proficient, and the applications did exactly what was expected. The computer user, well versed in the technical language of the computer, communicated with ease and independence. Conversely, the computer user deficient in the required technical literacy, essential to successful and productive interaction with the machine, was faced with, and many years later continues to be faced with, a defective, dysfunctional relationship with a machine that has evolved to a position of global pre-eminence.

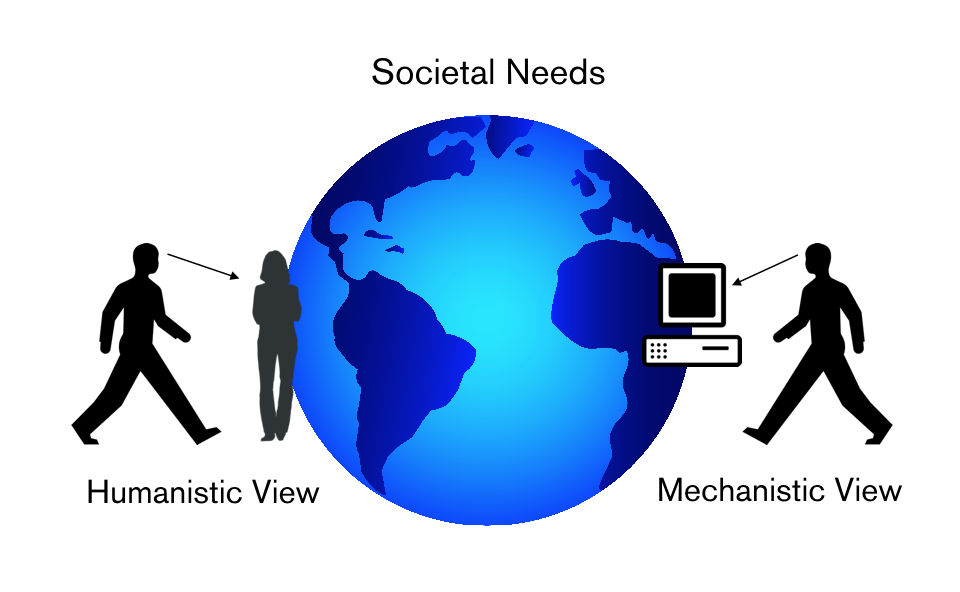

In his book The Human Factor: Revolutionizing the Way People Live with Technology (2004)6, Kim Vicente describes two worldviews. Individuals trained in the Cyclopean Mechanistic worldview were considered Wizards and placed in charge of designing technology. Individuals trained in the Cyclopean Humanistic worldview generally lacked the technical know-how to build dependable technological systems, so control over the design of technology fell to the Wizards. The belief that the Humanists were technically challenged meant that they were not thought capable of contributing anything of value. The Wizards were given the torch to carry; they were the authority when it came to designing the interface for Human Computer Interaction (HCI). Technological design and implementation reflected solely the Mechanistic worldview of the Wizards, with any Humanistic component dismissed and negated.

Figure 1

Vicente’s description of two static worldviews, one technological and one humanistic, one dominant and the other insignificant, emphasizes the gulf that exists between the development and design of new technology on the one hand, and its consumption and use on the other. When we sit in front of a computer and navigate the interface, we assume that the interaction will be a seamless flow between two systems: the engagement of our cognitive faculties with the program design of the machine. There could and should be an intuitive connection between the user and the application the user is attempting to communicate with. Human interaction over the Internet has become an integral part of how we communicate. Business associates, acquaintances, strangers, families and friends conduct their daily lives reliant on a technology that, in most cases, gives little measured consideration to the human user in the flow between the two systems. Oversights and limitations continue to stifle communication between the technology and the user, the man and the machine.

In 1959 C.P. Snow, a Cambridge-trained scientist and novelist, expressed his concern about the intellectuals of western society and the division forming between two camps: the technical and analytical thinkers on one side, and the proponents of a creative, humanistic outlook on the other. This breakdown of communication between the two cultures, the sciences and the humanities, would, he feared, become a major stumbling block to solving critical world issues. The expansion of the Internet, and the consequent cultural and social dependence on it by a significant percentage of the world’s population, has given the computer and all its functions a vital role. The march of technology, dominated by ‘the mechanistic worldview of the wizards’, must find a sense of equilibrium with the humanistic worldview, and the clash of two cultures must be resolved.
“There seems then to be no place where the cultures meet…The clashing point of the two subjects, two disciplines, two cultures — of two galaxies, so far as that goes — ought to produce creative chances. The chances are there now. But they are there, as it were, in a vacuum, because those two cultures cannot talk to each other.”7

C.P. Snow

HUMAN COMPUTER INTERACTION

In the world of technological innovation, users are dependent upon User Interface Design, which permits human interaction with the devices that we employ on a daily basis. Our culture is shaped and defined by electronic ‘millstones’: PDAs, pagers, pocket PCs, mobile phones, and the ubiquitous iPod. The need to be electronically connected 24 hours a day is now indisputable, and our homes are a testament to electronic consumer products: coffee makers, microwave ovens, dishwashers, televisions, computers, clocks, radios, et cetera. It was not technology alone that won user acceptance, but the user experience: how the user perceives and interacts with the product. Successful human interaction with a machine is achieved through effective User Interface Design.

Within the short history of technology, and of our relationship with all things electronic, are footnotes of failure: a litany of bad design and ‘user unfriendly’ objects seemingly produced for our convenience. The VHS home video player/recorder, destined for millions of households around the world, soon became notorious for how difficult it was to set the clock or to program it to record TV shows at a future date, its user interface cluttered with multiple flashing lights, buttons, and settings. The instruction manuals that accompanied these devices were vast and confusing. Users spent undue amounts of time going through the step-by-step instructions, only to discover that the instructions failed to communicate the intended task. “I never did manage to learn how to set the clock, or programme it, but – hey – who cares? It was mostly used to play movies, and only very occasionally to record off air. Over what seems to have been a very short time period the cupboard full of VHS tapes has been replaced by DVDs, which in turn are systematically being transferred to a domestic server.”8

A well-designed tool should contain visual clues and cues to aid and expedite the process of use. A hammer looks like a device for hammering, just as a pair of scissors looks like a device for cutting, and both invite an intuitive response. A well-designed doorknob signals whether it should be pushed, pulled, or turned, with certain features providing strong clues to its operation. In the visual world of interface navigation, however, the tools are not obvious to the novice user, and they lack the subtle visual cues that invite interaction. In his book “The Psychology of Everyday Things”9, Donald Norman, an HCI researcher, author, and leading cognitive scientist in the field of user interface design, describes a critical element in the design of an object: the visual clues to its function and operation that suggest how the object should be used. If a poorly designed doorknob contains no clues as to its function, then we have to experiment until we can determine how it works. Norman employs the term affordance for the perceived and actual properties of an object that determine how it could possibly be used. Ease of operation takes on a new relevance in the context of computer technology. Little or no consideration has been given to affordance in the development of the systems we interact with to access what we need and desire. Well-designed interfaces should be based on solid design principles that enhance use. Good design should communicate directly with users through the appropriate placement of visual clues, hints, and affordances.

The essential function of the interface should not be underestimated in the interactive process between man and the machine. It is the point where interaction occurs between two systems, the decisive moment of communication. Our reliance on the ability to communicate with various interfaces is growing rapidly as we are faced with using diverse types of interfaces on a daily basis, from waking in the morning to securing our home, driving, accessing mail, money, food, transportation and information, attending school and conducting business. It is a process that demands knowledge of the design language, which in the case of an interface is a visual language that uses icons and other symbols to convey meaning. The structure and appearance of this design(ed) language, which governs the flow of communication, should be the artful combination of three primary elements: the objects, icons, colours and functions that appear on the computer screen; the organising principles, which include the interface grammar (how the design objects are combined to convey meaning); and the qualifying values that take into account the medium’s context. The manipulation of these design language components, used to construct an interface, directly affects the level and quality of communication between the user and the machine.

The divide between two cultures, the sciences and the humanities, articulated by C.P. Snow in the Rede Lecture in 1959, persists, with the primary development of the design language of the computer interface still managed by the wizards. The function of the designer remains trivialized: the engineers and programmers maintain control over the software development process, working to bring life to the software from the inside out and rarely considering users’ needs along the way.
“The gurus protect their position of power by keeping the software and hardware sufficiently difficult for the average user- thereby protecting their investment in computer knowledge. It is essential that the average user of technology play a primary role in the development of easy-to-use computer hardware and software.”10

This critical fault in the system, and its effects on an angst-ridden population of computer users, warrants diligent scrutiny. Mitchell Kapor, the designer of Lotus 1-2-3, delivered the “Software Design Manifesto” to a group of leading computer industry executives. Although a wizard himself, he recognized the deficiency in the existing hierarchy and proposed that engineers should build systems, programmers should write code, and designers should be ultimately responsible for the overall conception of software products, in ways that would support the users’ actual needs.

“Scratch the surface and you’ll find that people are embarrassed to say they find these devices hard to use. They think the fault is their own. So users learn a bare minimum to get by. They under-use the products we work so hard to make… The lack of usability of software and the poor design of programs are the secret shame of the industry.”11

There are billions of interfaces designed by individuals for the express use of an end user. The content and interaction may be passive and entertaining, but in many instances the interaction has far-reaching consequences, affecting personal safety and relaying critical information. A road test and review of the BMW 745i in Car and Driver, June 2002, revealed numerous examples of poor interface design, surprisingly present in a symbol of state-of-the-art car design. “Then again, the 745i may also go down as a lunatic attempt to replace intuitive controls with overwrought silicon, an electric paper clip on a lease plan. One of our senior editors needed 10 minutes just to figure out how to start it (insert the key, step on the brake, then push the start button), five minutes to drop it into gear (pull the electronic column shifter forward, then up for reverse or down for drive), and then two weaving miles to decode the arcane seat controls (select which quadrant of the seat you wish to adjust from one of four buttons located near your inboard thigh, then manoeuvre the adjacent joystick until posterior bliss is achieved)—all of which made him so tardy to pick up his kid that he rushed, only to get bagged by a radar boy scout within sight of the school.”12

The dismissive attitude toward the end user, obvious in so many interface designs, acts as a cumulative, global malady. The permeation of computer technology into our culture ensures that every user and consumer of the technology will continue to suffer the symptoms of bad design.